With the release of the Nikon Z6 and Z7, Nikon shooters at long last have a way to add stabilization to unstabilized lenses. This presents some nifty opportunities - a decade's worth of fast AF-S primes are now all stabilized, and some very desirable zooms such as the 14-24 and the Sigma 24-35 ART also gain stabilization.

Much more interestingly, the original AF-S supertelephotos all gain stabilization. The VR versions command a $2000 premium over their unstabilized counterparts, so clearly there are substantial (one Z6 per lens!) savings to be had here. The situation is not as magical as it first seems though - image shift at the sensor scales with focal length, so small angular motions transform into huge shifts that the sensor has only limited travel to correct. As a result, in-body stabilization is not as effective for long lenses as lens-based stabilization.
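To put rough numbers on "small angular motions transform into huge shifts", here is a minimal sketch using the thin-lens approximation for a subject at infinity, where image shift is focal length times the tangent of the rotation angle. The 0.05 degree shake angle is an arbitrary illustrative value, not a measurement, and the 6 µm pixel pitch is an assumed round figure for a 24 MP full-frame sensor (36 mm across roughly 6000 pixels). The function names are my own.

```python
import math

def sensor_shift_um(focal_length_mm, angle_deg):
    """Image shift at the sensor (in microns) for a small camera rotation.

    Thin-lens approximation, subject at infinity: shift = f * tan(angle).
    """
    return focal_length_mm * math.tan(math.radians(angle_deg)) * 1000.0

def sensor_shift_px(focal_length_mm, angle_deg, pixel_pitch_um=6.0):
    """Same shift expressed in pixels; the 6 um default pitch is an assumption."""
    return sensor_shift_um(focal_length_mm, angle_deg) / pixel_pitch_um

if __name__ == "__main__":
    shake_deg = 0.05  # hypothetical shake angle, for illustration only
    for focal_length in (50, 500):
        print(focal_length,
              round(sensor_shift_um(focal_length, shake_deg), 1),
              round(sensor_shift_px(focal_length, shake_deg), 1))
```

Under these assumptions the same wobble moves the image by about 44 µm (around 7 pixels) at 50mm and about 436 µm (around 73 pixels) at 500mm. The shift scales linearly with focal length, which is why a sensor that comfortably stabilizes a normal prime has to work ten times harder behind a 500mm.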

I don't have a Z-series camera, but I do own a Sony A7 II (which has a very similar sensor resolution and stabilization system) and an AF-S 500mm f/4. Since I had been contemplating a Z6, I was interested in testing how effective IBIS is with really long lenses.

Testing stabilization is a little tricky, because there is inherently a human factor involved (some people are really good at keeping cameras stable, some less so). For these tests, I settled on a compromise which I felt would be representative of my shooting situations:

- Lens and camera mounted on a gimbal head on a tripod - I think a setup like this will always have some kind of support underneath it, be it tripod or monopod; other than maybe the 500FL no one is going to be handholding a big supertelephoto prime for very long.

- Gimbal head locks loosened - if I'm shooting with a long lens, I'm probably also following something that moves. Realistically, the scenario in this test would only show up for slow-moving wildlife or portraiture; any real "action" will require 1/500 or faster anyway to stop subject motion.

- Camera triggered by pressing the shutter button - following the same line of reasoning as above, I wanted to be able to keep my hand on the grip at all times.

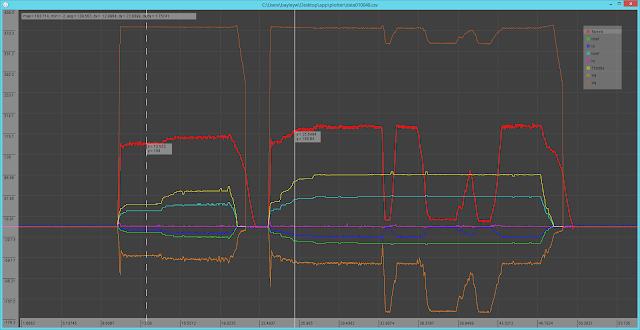

Results

Blogger is not really set up for hosting huge images, so the test results are externally hosted here. 100.png, 200.png, 400.png, and 800.png are, respectively, 1/100, 1/200, 1/400, and 1/800 shutter speeds without stabilization; is100.png, is200.png, is400.png, and is800.png are the same speeds with stabilization.

Analysis

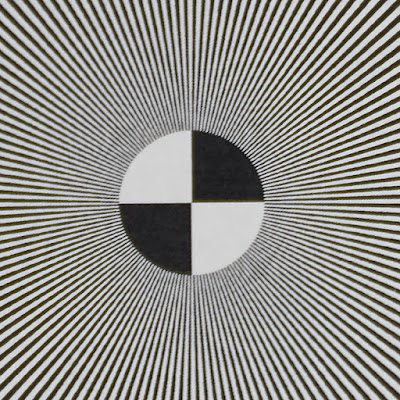

IBIS is effective, even for very long focal lengths. At 1/100 for example, the worst frame (out of 4) with IS off looked like this:

Completely unusable, by most standards. In contrast, the worst frame with IS on looked like this:

Still a bit soft, but this would be usable for smaller output sizes, especially with some careful postprocessing.

Stabilization also helps, but much less visibly, at 1/200:

Off:

On:

However, stabilization is not magic. While the 1/100 shots with IS on are usable, they are still not quite as sharp as a 1/800 image:

The 1/200 shots get pretty close, but are still a bit blurrier (the difference would likely not be perceptible with a softer lens).

Conclusion

What did we learn? For at least one shooting scenario (lens supported but not completely locked down), IBIS does make a difference, giving at least 2 stops of stabilization. Anecdotally, this puts it roughly on par with lens-based stabilization, though it's a little hard to tell: lens-based stabilization is supposed to be good for 3+ stops, but there's precious little subject matter which needs a big telephoto prime and moves slowly enough to be shot at 1/30.
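For reference, the stop count is just the base-2 logarithm of the ratio between two shutter speeds. Below is a minimal sketch of that arithmetic; the function name is my own, and pairing the stabilized 1/200 frames with the unstabilized 1/800 frames is my reading of the comparison in the analysis, not a precise measurement.

```python
import math

def stops_between(slow_denominator, fast_denominator):
    """Stops between two shutter speeds given as 1/denominator seconds.

    Each stop is a doubling of exposure time, so the difference in stops
    is log2 of the ratio of the two denominators.
    """
    return math.log2(fast_denominator / slow_denominator)

if __name__ == "__main__":
    # Stabilized 1/200 looked close to unstabilized 1/800.
    print(stops_between(200, 800))
    # Stabilized 1/100 was usable but visibly softer than 1/800.
    print(stops_between(100, 800))
```

By this arithmetic, 1/200 to 1/800 is 2 stops and 1/100 to 1/800 is 3 stops, which is why I'd call the benefit "at least 2 stops" and stop short of claiming 3.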

We also learned that fast shutter speeds are necessary to extract maximum performance from a telephoto prime. While sensor-based stabilization allows for usable shots at slow shutter speeds, reliably achieving the maximum optical performance of the lens still requires exposures of 1/(focal length) seconds or shorter.

The other question is how much more stable the viewfinder image is with IBIS. There are some scenarios where it is possible to shoot handheld, at least for a little while, and having IS is quite useful for framing purposes. Unfortunately, this is much harder to test, and I expect the answer to be "not much", given how much the viewfinder image moves.